One of the chapters of the book-in-progress, as mentioned previously, takes the widespread use of statistics in sports as a starting point, noting that a lot of the techniques stat geeks use in sports are similar to those scientists use to share and evaluate data. The claim is that anyone who can have a halfway sensible argument about the relative merits of on-base percentage and slugging percentage has the mental tools they need to understand some basic scientific data analysis.

I’m generally happy with the argument (if not the text– it’s still an early draft, and first drafts always suck), but it’s by no means perfect. Sports statisticians deal well with a lot of numbers, but there are some key concepts that consistently cause problems, mostly having to do with the essential randomness of sports.

I was struck by this this morning while watching Monday’s episode of Pardon the Interruption with The Pip (link goes to an audio archive file). About five minutes in, J.A. Adande, talking about the question of whether Chris Davis should compete in the Home Run Derby at baseball’s All-Star Game, says he shouldn’t, because:

…the history indicates that twice as many of the participants have gone down afterwards, they’ve seen their power numbers decrease rather than increase after the home-run derby.

(my transcription of the audio at the link). This immediately jumped out at me, having spent an inordinate amount of time futzing around with baseball statistics in the recent past. While it’s tempting to impose narrative causality on this– Tony Kornheiser goes on to talk about how competing in the HR derby can mess up a swing– there’s really no need. This is a simple and clear example of regression to the mean.

The basic idea is simple: if you take a large sample of random processes– the time required for excited atoms to decay, stocks fluctuating in price, baseball players hitting home runs– and look at them over a long interval, you will find that each has some natural average. Over a shorter interval, though, some of them will exceed that average value purely by chance– some atoms will decay more rapidly, some stocks will go up more than down, and some hitters will hit more home runs. If you track that high-performing subset over subsequent intervals, though, you will find that its average comes down, approaching the very-long-term average (as it must). This is NOT the mythical “Law of Averages,” by the way– the overall probability of a particular outcome does not change based on past performance. Rather, it’s an inevitable consequence of the statistical fluctuations of small-sample averages.

The set of Home Run Derby participants is a classic example of regression to the mean. How do you get picked to compete in the Home Run Derby, after all? By hitting a lot of home runs in the first half of the baseball season. That’s the very definition of exceeding average performance in a short interval, so of course you will see a lot of those players regress to the mean in the second half of the season. There’s nothing remotely surprising about this, and no need to invent stories about swing changes. Somebody with more formal knowledge of statistics than I have could probably even make a prediction about whether that two-to-one ratio of decreases to increases makes sense.
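To see the selection effect in isolation, here is a minimal Python sketch. It rests on a deliberately extreme assumption that is not a claim about real baseball: every simulated player has exactly the same true home-run rate, so any first-half lead is pure luck. The function name `simulate_derby` and all the parameter values (300 players, 300 at-bats per half, a 4% home-run rate, 8 invitees) are made up for illustration.

```python
import random

def simulate_derby(n_players=300, at_bats=300, hr_rate=0.04, n_picked=8, seed=1):
    """Toy model: identical players, invitees chosen by first-half home runs."""
    rng = random.Random(seed)

    def half_season():
        # Each at-bat is an independent trial with the same HR probability.
        return sum(rng.random() < hr_rate for _ in range(at_bats))

    first = [half_season() for _ in range(n_players)]
    second = [half_season() for _ in range(n_players)]

    # "Derby invitees": the players with the most first-half home runs.
    picked = sorted(range(n_players), key=lambda i: first[i], reverse=True)[:n_picked]

    declined = sum(second[i] < first[i] for i in picked)
    improved = sum(second[i] > first[i] for i in picked)
    first_picked = [first[i] for i in picked]
    second_picked = [second[i] for i in picked]
    return declined, improved, first_picked, second_picked

declined, improved, first_picked, second_picked = simulate_derby()
print("first-half HR of invitees: ", first_picked)
print("second-half HR of invitees:", second_picked)
print("declined:", declined, "improved:", improved)
```

Real players do differ in ability, so real invitees should regress only part of the way toward the league average. The sketch shows the direction of the effect, not its size.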

Regression to the mean is kind of a subtle point, though, and one that eludes even people with vastly more mathematical sophistication than Tony Kornheiser.

And, of course, if you’re talking about probabilities in baseball, there’s no way to avoid the subject of batting average, which I was writing about last week. In the course of that chapter, I mentioned that one thing scientists do that sports statisticians don’t is to report uncertainties. (Though I argue there’s a small effort made to account for the idea, by requiring a threshold number of attempts for most awards…) Which got me wondering what the uncertainty associated with a baseball batting average would be.

Now, while I have a Ph.D., my stats training is not all that extensive. I do, however, retain a couple of simple rule-of-thumb ideas, one of which is that in any collection of random measurements, the uncertainty in the number that come out in one category or another is roughly equal to the square root of the number being measured. So the uncertainty in the batting average (defined as the number of hits divided by the number of at-bats) ought to be equal to the square root of the number of hits divided by the number of at-bats. The qualifying number of at-bats for the MLB batting title is around 500, and a good hitter will get a hit about a third of the time, so plugging those numbers in suggests an uncertainty of about 0.025.
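Written out, with $H$ for hits and $N$ for at-bats (symbols introduced here just for the arithmetic), and taking about 167 hits in 500 at-bats:

$$
\sigma_{\text{AVG}} \approx \frac{\sqrt{H}}{N} = \frac{\sqrt{167}}{500} \approx \frac{12.9}{500} \approx 0.026
$$

That is in line with the "about 0.025" quoted above. As a side note that goes beyond the rule of thumb: if each at-bat is treated as an independent trial with hit probability $p \approx 1/3$, the standard binomial formula gives a slightly smaller value,

$$
\sigma_{\text{AVG}} = \sqrt{\frac{p(1-p)}{N}} = \sqrt{\frac{0.333 \times 0.667}{500}} \approx 0.021
$$

so the square-root rule modestly overestimates the spread when hits are not rare, but it lands in the same second decimal place.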

That struck me as awfully large, given that baseball batting averages are traditionally reported to three digits (thus “batting 300” and so on). An uncertainty in the second digit of that would mean that most of the fine comparisons between players– the batting title is often won by a margin of 0.010 or less– were completely meaningless. So I did what any scientist would do confronted with this: I simulated it to check.

I was being kind of lazy, so I just knocked this together in Excel: I took two “players” each with an “average” of 0.330, and ran 100 “seasons” of 500 “at-bats” for each. Each at-bat was a random number generated between 0 and 1; if it was less than 0.330, they got credit for a “hit,” and if it was greater, nothing. Then I calculated the average for each of those seasons, and looked at the standard deviation of those averages as a measure of the uncertainty.
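For anyone who would rather not open a spreadsheet, the same setup can be sketched in a few lines of Python. This is a rough equivalent, not the original Excel sheet: the function name `simulate_seasons`, the seeds, and the use of the standard `random` and `statistics` modules are assumptions made for illustration.

```python
import random
import statistics

def simulate_seasons(true_avg=0.330, at_bats=500, seasons=100, seed=None):
    """Return one simulated batting average per season."""
    rng = random.Random(seed)
    averages = []
    for _ in range(seasons):
        # An at-bat is a "hit" when a uniform random number falls below true_avg.
        hits = sum(rng.random() < true_avg for _ in range(at_bats))
        averages.append(hits / at_bats)
    return averages

# Two "players" with identical true ability but different random streams.
player_a = simulate_seasons(seed=1)
player_b = simulate_seasons(seed=2)

# Standard deviation of the season averages, used as the uncertainty.
spread_a = statistics.stdev(player_a)
spread_b = statistics.stdev(player_b)

# Fraction of seasons in which player A out-hit player B.
wins_a = sum(a > b for a, b in zip(player_a, player_b)) / len(player_a)

print(f"player A spread: {spread_a:.3f}")
print(f"player B spread: {spread_b:.3f}")
print(f"player A 'wins' {wins_a:.0%} of head-to-head seasons")
```

The exact numbers depend on the seeds, and changing them is a quick way to see how much the "head-to-head" record between two identical players bounces around.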

The results? One “player” had an uncertainty of 0.025, the other 0.021, right in line with my naive prediction. For the record, the one with the higher uncertainty won 58% of their “head-to-head” matchups, and is thus headed to the simulated Hall of Fame in simulated Cooperstown. Which is completely meaningless, just like batting average differences smaller than about 0.020…

The take-home from this: People, even stat geeks, are really bad at dealing with randomness. Even in situations where we know we’re dealing with random processes, we’re prone to trying to impose narrative at a level that just can’t be justified mathematically. Concepts like statistical fluctuations and regression to the mean are really tricky to internalize, even for people with scientific training– like I said, my initial assumption was that I’d done something wrong with the rule-of-thumb calculation.

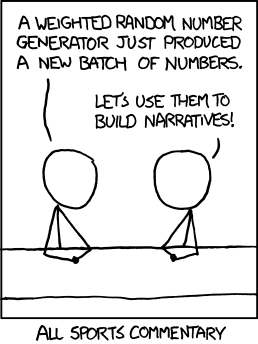

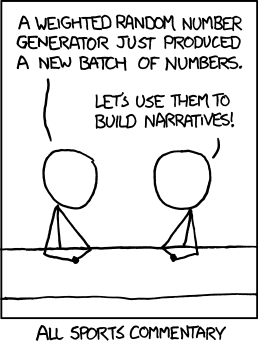

Or, if you just want the TL;DR version of this entire post, I’ll refer you to the classic xkcd that serves as the “featured image” above, which I’ll reproduce below for the RSS readers:

Do you have a reference for your simple rule-of-thumb idea, that in any collection of random measurements, the uncertainty in the number that come out in one category or another is roughly equal to the square root of the number being measured?

Oh man, and you like baseball too? Science and baseball are my chocolate and peanut butter.

Anyway, regarding “Somebody with more formal knowledge of statistics than I have could probably even make a prediction about whether that two-to-one ratio of decreases to increases makes sense.”

Check out

http://www.fangraphs.com/blogs/the-odds-of-chris-davis-matching-roger-maris/

from today’s Fangraphs. Later in the article, it shows how (depending on what Chris Davis’ true HR rate is) he can expect to hit 51+ HR @ ~67.5%.

i.e., without considering anything about whether or not he performed in the Home Run Derby, the probability that his HR numbers decrease – is already “two to one” at a number selected which is significantly lower than his projected pace at this same point.

I’m in the last year (last 9 credits, actually) towards a chem degree, and last semester I took a 400 level “stats for science” class. It was easily the most useful non-chemistry course I ever took. I thoroughly recommend it to any current science students among your readers.

Do you have a reference for your simple rule-of-thumb idea, that in any collection of random measurements, the uncertainty in the number that come out in one category or another is roughly equal to the square root of the number being measured?

It comes from the fact that a Poisson distribution leads to fluctuations about the mean value that have a standard deviation exactly equal to the square root of that mean. While not everything is a Poisson distribution, you usually don’t go all that far wrong by taking it as a baseline.

Heh, and looking at the data in that article closer, it puts the probability at much worse than “2 to 1” that Mr. Davis’ HR numbers “decrease”. If he is currently on a pace for 62 HR, the probability for maintaining or exceeding that pace is only 6.4%.

In other words, if we want to see more players begin to improve their HR rates after participating in a HR derby, they need to start inviting players who fit a different profile than mashing the ball in the first half.