One of the cool things about working at Union is that the Communications office gets media requests looking for people to comment on current events, which sometimes get forwarded to me. Yesterday was one of those days, with a request for a scientist to comment on the bizarre sports scandal surrounding the deflated footballs used […]

Category: In the News

Uncertain Dots 24

If you like arbitrary numerical signifiers, this is the point where we can start to talk about plural dozens of Uncertain Dots hangouts. As usual, Rhett and I chat about a wide range of stuff, including the way we always say we’re going to recruit a guest to join us, and then forget to do […]

Nobel Prize for Blue LEDs

The 2014 Nobel Prize in Physics has been awarded to Isamu Akasaki, Hiroshi Amano and Shuji Nakamura for the development of blue LEDs. As always, this is kind of fascinating to watch evolve in the social media sphere, because as a genuinely unexpected big science story, journalists don’t have pre-written articles based on an early […]

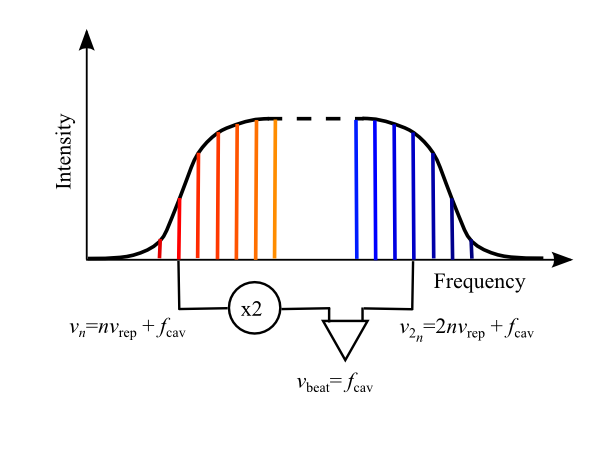

Finding Extrasolar Planets with Lasers

On Twitter Sunday morning, the National Society of Black Physicists account retweeted this: “Using Lasers to Lock Down #Exoplanet Hunting #Space http://t.co/0TN4DDo7LF” (✨The Solar System✨, @The_SolarSystem, September 28, 2014). I recognized the title as a likely reference to the use of optical frequency combs as calibration sources for spectrometry, which is awesome stuff. Unfortunately, […]

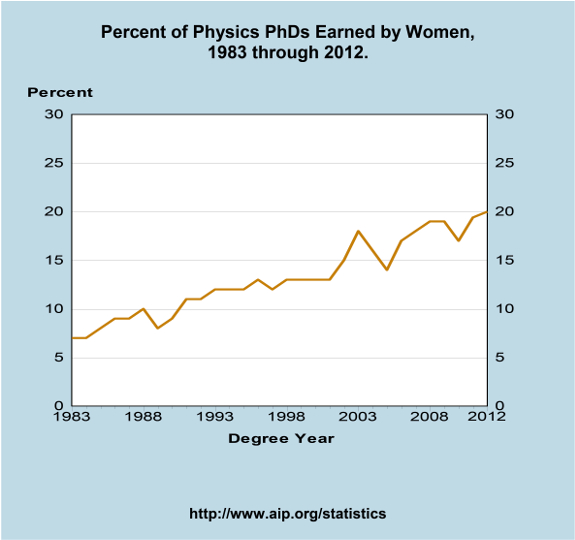

Women of the Arxiv

Over at FiveThirtyEight, they have a number-crunching analysis of the number of papers (co)authored by women on the arXiv preprint server, including a breakdown of first-author and last-author papers by women, which are perhaps better indicators of prestige. The key time series graph is here: This shows a steady increase (save for a brief drop […]

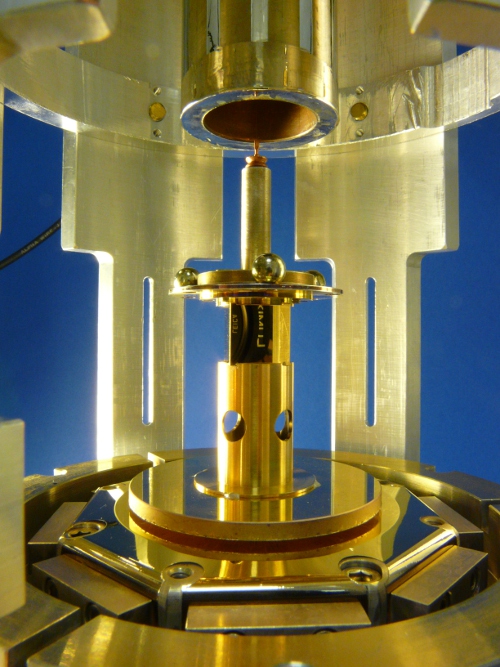

Impossible Thruster Probably Impossible

I’ve gotten a few queries about this “Impossible space drive” thing that has space enthusiasts all a-twitter. This supposedly generates thrust through the interaction of an RF cavity with a “quantum vacuum virtual plasma,” which is certainly a collection of four words that turn up in physics papers. An experiment at a NASA lab has […]

Two Cultures of Incompressibility

Also coming to my attention during the weekend blog shutdown was this Princeton Alumni Weekly piece on the rhetoric of crisis in the humanities. Like several other authors before him, Gideon Rosen points out that there’s little numerical evidence of a real “crisis,” and that most of the cries of alarm you hear from academics […]

What Scientists Should Learn From Economists

Right around the time I shut things down for the long holiday weekend, the Washington Post ran this Joel Achenbach piece on mistakes in science. Achenbach’s article was prompted in part by the ongoing discussion of the significance (or lack thereof) of the BICEP2 results, which included probably the most re-shared pieces of last week […]

On Black Magic in Physics

The latest in a long series of articles making me glad I don’t work in psychology was this piece about replication in the Guardian. This spins off some harsh criticism of replication studies and a call for an official policy requiring consultation with the original authors of a study that you’re attempting to replicate. The […]

“Earthing” Is a Bunch of Crap

A little while back, I was put in touch with a Wall Street Journal writer who was looking into a new-ish health fad called “earthing,” which involves people sleeping on special grounded mats and that sort of thing. The basis of this particular bit of quackery is the notion that spending time indoors, out of […]